Here is a test of Google's NotebookLM having a deep dive conversation about Intangible Medium…

Final Project Draft

This post serves as a draft of my final project and documents the elements that will go into it.

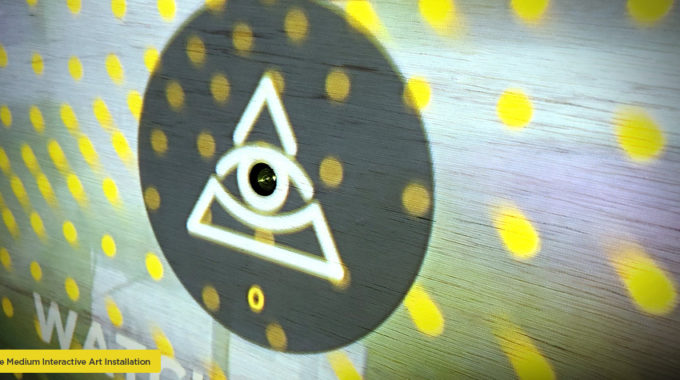

I coded the base of the interactive visualization in p5.js. It provides the visual element that will be projected onto a wall or wood surface, and it serves as the interactive “canvas”.

For demo purposes I added a p5.js sketch here on the site, so users can experience the base visualization and use their webcam to become part of the art. The finished project is meant to be an interactive art installation, which would require the viewer to be in the presence of the piece to interact with the sensors. This demo illustrates how the camera would function for a viewer on site. It will ask for access to your webcam.
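For anyone curious how the webcam part can work in p5.js, here is a minimal sketch. It is not the code behind the demo, just an illustration under a few assumptions: p5.js is already loaded on the page, the feed should fill the canvas, and the image should be flipped so it behaves like a mirror. The `coverFit` helper is a hypothetical name I made up for this example; `createCapture(VIDEO)` is the p5.js call that triggers the browser's camera permission prompt.

```javascript
// Minimal p5.js sketch: draw the visitor's webcam feed onto the canvas.
// Assumes p5.js is loaded on the page. Illustration only, not the installation's code.

let capture; // webcam feed, created in setup()

// Hypothetical helper: position and size that scale a source frame so it
// covers a destination area while keeping its aspect ratio (overflow is cropped).
function coverFit(srcW, srcH, dstW, dstH) {
  const factor = Math.max(dstW / srcW, dstH / srcH);
  const w = srcW * factor;
  const h = srcH * factor;
  return { x: (dstW - w) / 2, y: (dstH - h) / 2, w: w, h: h };
}

function setup() {
  createCanvas(windowWidth, windowHeight);
  capture = createCapture(VIDEO); // browser asks the user for camera access here
  capture.hide(); // hide the raw <video> element; the sketch draws the frames itself
}

function draw() {
  background(0);
  // Skip drawing until the video reports usable dimensions.
  if (!capture || capture.width === 0 || capture.height === 0) return;

  const fit = coverFit(capture.width, capture.height, width, height);
  push();
  translate(width, 0); // flip horizontally so the feed acts like a mirror
  scale(-1, 1);
  image(capture, fit.x, fit.y, fit.w, fit.h);
  pop();
}
```

If the visitor declines camera access, this sketch simply leaves the canvas black, so the base visualization could still be drawn on its own in that case.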

Link to Interactive Project Demo Draft

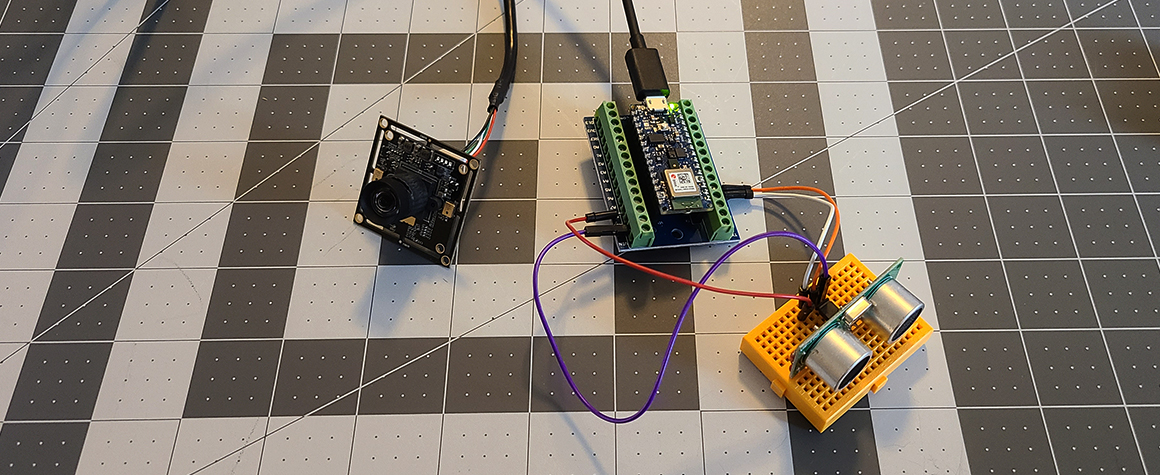

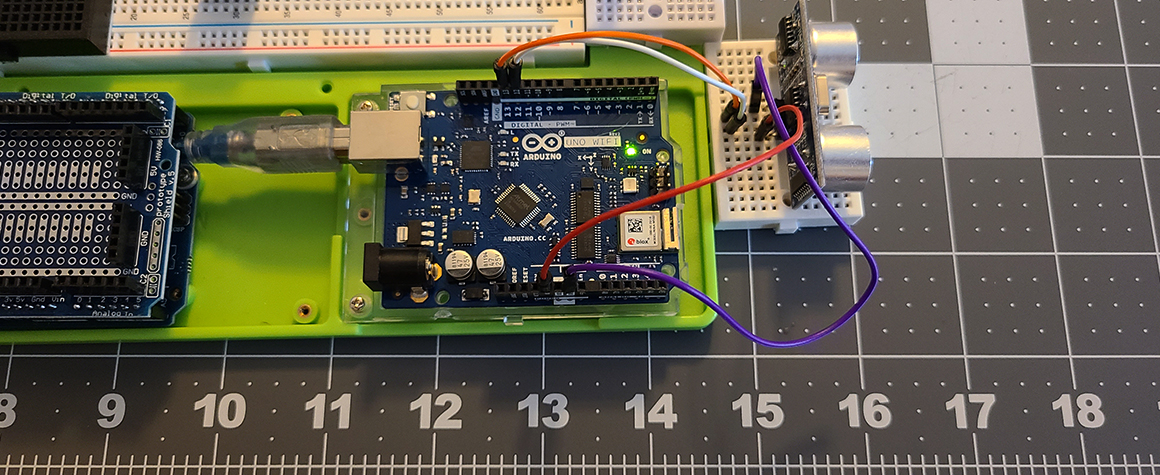

Here you will find photo documentation of the sensors and video camera module that will be integrated into the piece. I’ve been testing two microcontrollers as the base systems for the input devices.

One element I’ve been struggling with is incorporating a second camera that would capture a photo of the person viewing the art installation and submit it to an archive page on the website. I’ve tested a couple of options, but I am having issues getting the photos to populate the site dynamically. Back to work on that!

Questions for the draft viewers

What is your first impression when viewing the interactive element?

Did you test the camera function? If so, what feeling did it evoke?

If you chose not to try the camera function, how come?